I can still clearly remember when object-oriented programming started to become the standard model, moving away from structural procedural code. I used to think to myself that new programmers, just starting in C++ or Java had it easier than guys like me, who started in Pascal and Basic and needed to relearn how to do things. In fact, I often found myself doing things the wrong way in C++ because I had just learned C and so I tried to fit my structural procedural brain trained on C, into OO, not unlike a square peg into a round hole.

Same thing when I learned data warehousing, star schemas and multidimensional modeling. I came from the standard 3NF OLTP world into OLAP and found that the learning curve was such that I would find it easier to start in the OLAP world instead of relearning new ways of expressing data.

I find this all very similar to where we are now in the NoSQL world. There are obvious use cases for NoSQL data stores such as caching, storing unstructured data, log events, etc. And so it makes sense to move or create apps that may have been using MySQL or any other lock-based serializable ACID RDBMS.

But you also limit yourself to the tools available for input, update and retrieval. And since I’m not here to write Java or Javascript to get or put data into a data store, I greatly appreciate tools that make interacting with a NoSQL data store like MongoDB easy and familiar to my SQL-ized brain. Many BI, OLAP and ETL tools just don’t speak to NoSQL data stores.

Here is an example of using Pentaho Data Integration (PDI, aka Kettle) to connect to my MongoDB collection, insert data and then report on that data, all within the Pentaho Business Analytics suite. If you’d like to try this out, just download the Pentaho BA Suite evaluation from http://www.pentaho.com. All of these tools are available in the Pentaho Business Analytics Suite.

First, I created my database and collection (table) in MongoDB, calling the DB Demo and the collection or table “Sales” from the MongoDB command prompt:

MongoDB shell version: 2.4.5

connecting to: test

> use Demo;

switched to db Demo

> db.createCollection(“Sales”);

{ “ok” : 1 }

>

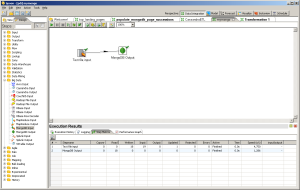

That’s all that I had to do to set up my data store in Mongo. The rest was done in Pentaho. But for now, it’s time to get a spreadsheet of data from my sales.csv into Mongo using the PDI tool from Pentaho. I will first create a transformation (the T in ETL):

My source is a simple CSV file of sales that looks like this:

EmpID,LastName,Sales,SalesDate

1,Kromer,12,1/1/2012

2,Smith,12.99,1/2/2012

3,Jones,1.99,1/3/2012

4,Kumar,120.5,1/4/2012

5,Yung,50,1/5/2012

6,Basero,5,1/1/2012

1,Kromer,25.25,1/2/2012

2,Smith,12,1/3/2012

3,Jones,12.99,1/4/2012

4,Kumar,1.99,1/5/2012

5,Yung,120.5,1/1/2012

6,Basero,50,1/2/2012

1,Kromer,5,1/3/2012

2,Smith,25.25,1/4/2012

3,Jones,11,1/5/2012

4,Kumar,334.3,1/6/2012

5,Yung,1000.2,1/7/2012

6,Basero,1,1/8/2012

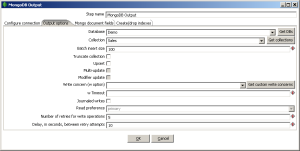

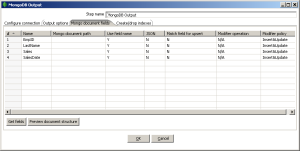

Straight-forward comma-separated value strings with a header that includes Employee ID, last name, sales amount and sales data fields. I connect that CSV text file input step to the MongoDB output step to insert those as data documents into Mongo. What you’ll see in the next 2 configuration screens below are the different tab options in the MongoDB output step dialog from PDI Kettle. I am pointing to my local MongoDB instance database Demo and collection Sales. I define the fields that will map to the input fields coming from the CSV text file. Mongo will use these names to build the document in the data store for me. As a data engineer, not a Java programmer, I love this because this allows me to use an ETL data intergrator using the terminology that I understand and to easily move data into my NoSQL data stores:

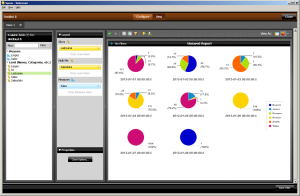

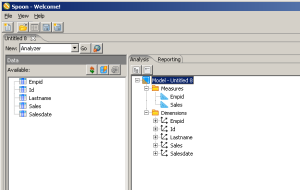

Great, I have data in Mongo. Now let’s use Pentaho Instaview to quickly generate a report by taking that same data, staging it in an in-memory database and then put an analysis model around it for OLAP on Mongo that will look like this:

Pentaho creates the PDI transformations for you to report on the Mongo data and also generates an automatic multidimensional model in Mondrian:

You can edit and modify that model and create any visualization in the Analyzer tool that you like. It doesn’t matter that the data was sourced from MongoDB. This enables me to provide BI and analytics on MongoDB or other NoSQL sources, without needing to relearn how to engineer a BI solution. I’m just using my business analytics tools, regardless of the backend system types.

One more thing, when using Instaview to analyze data from MongoDB: make sure you’ve selected the MongoDB source and then point to your collection. Instaview generates the PDI transformation for you and use the power of the platform, which includes the ability of the Pentaho interfaces into Mongo to discover the schema metadata. Notice in this screen in Instaview, we see a Kettle transformation that includes a button to the get the fields from the MongoDB collection:

You should be able to use this in your environment as a way to built data integration and reporting with MongoDB as a source, very similar to how you do things today with a traditional RDBMS backend, enabling NoSQL as a part of our BI solution.

[…] There are obvious use cases for NoSQL data stores such as caching, storing unstructured data, log events, etc. And so it makes sense to move or create apps that may have been using MySQL or any other lock-based serializable ACID RDBMS.But you also limit yourself to the tools available for input, update and retrieval. And since I’m not here to write Java or Javascript to get or put data into a data store, I greatly appreciate tools that make interacting with a NoSQL data store like MongoDB easy and familiar to my SQL-ized brain. Many BI, OLAP and ETL tools just don’t speak to NoSQL data stores. That’s all that I had to do to set up my data store in Mongo. The rest was done in Pentaho. But for now, it’s time to get a spreadsheet of data from my sales.csv into Mongo using the PDI tool from Pentaho. I will first create a transformation (the T in ETL): […]

[…] I can still clearly remember when object-oriented programming started to become the standard model, moving away from structural procedural code. I used to think to myself that new programmers, just… […]

[…] What I really like about the way that Pentaho’s tools work with MongoDB is the way that it queries the data store’s metadata to fill in the database and collection information from the source. I wrote up a bit more about it here at this posting on KromerBigData about MongoDB with Pentaho tool…. […]

Hi i have a few questions. Please help me:

1) To Do OLAP on Mongo i am unable to find the administration button from where we select MDX.

2) Can we do MDX on Hadoop as well.

3) can we integrate this and call this job using java ?

4) And when i am transforming my data using kettle i am getting an error:

Unable to load data cache.

Confirm input settings in the configure panel.

Thanks for sharing your ideas. May I ask you ask you for some help with the following question? Im trying to compare the BI tools and Pentaho is definitely a very strong candidate in the market. Related to this post, what do you think is the value of a native integration to MongoDB as opposed to an ODBC connection?? thanks in advance.

The Pentaho approach to Analytics on MongoDB is native API access as opposed to a SQL connector like ODBC or JDBC. Talking natively to MongoDB means that the connection will be faster and the end-user experience is much better than going through an ODBC or JDBC layer.

thanks brother. it’s tutorial can help me.

but in here i have a problem. I wiil be create ware house with pentaho and that can tranformasi or terintergrasi from many mysqldb to mongo db.

how i can do it.

thanks brotr.

i from indonesia.

nice to meet u. thanks . ..:)

Thank you for showing us the information on Pentaho and how to Move Data Through MongoDB.

Get some more details on Pentaho Consulting