Azure Data Factory’s Mapping Data Flows have built-in capabilities to handle complex ETL scenarios that include the ability to handle flexible schemas and changing source data. We call this capability “schema drift“.

When you build transformations that need to handle changing source schemas, your logic becomes tricky. In ADF, you can either build data flows that always look for patterns in the source and utilize generic transformation functions, or you can add a Derived Column that defines your flow’s canonical model. Let’s talk a bit about the pros and cons of each approach:

Generic pattern-matching transformations

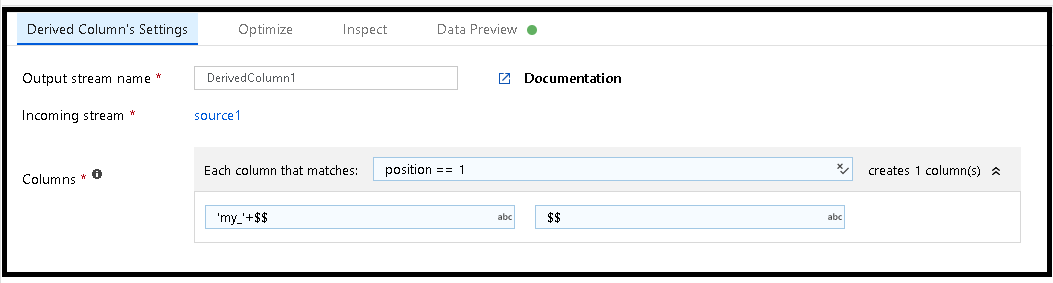

With this approach, you will build generic data flows where transformations use column patterns to match columns and perform actions on each column that matches that pattern.

With this approach, you would add column patterns to Derived Columns, Select Transformation, Aggregates, etc. and look for columns based on column names, ordinal position of columns, column data types, and streams in your data flow.

In the example above, we’re matching whatever column happens to come into the data flow from that particular source file, that is in position 1. We’re then creating a new column called “my_” concatenated with the name of the column that is in column position 1. The value for that column is represented with $$ and all we’re doing, in this case, is just keeping that original value, no transformations in this Derived Column example.

You can build complex data flows this way, but there are 2 important issues that you’ll need to be aware of when designing your logic:

- The new column is not propagated throughout the rest of the data flow in the UI. That means that you cannot inspect it or call it by name. In order to address “my_col” by name, you’ll need to explicitly use the byName() function.

- There is no logical “column projection” like you have with a well-defined schema. With static schemas, you can identify this projection in the Source Projection tab, but not for drifted columns.

Canonical model approach

Another approach is using a built-in facility for schema drift handling from the Data Preview tab in ADF Data Flows. Go to your Source transformation for a file source and click “Map drifted“. This will automatically create a complete canonical model for you with your drifted columns using a Derived Column transformation so that those fields can be referenced in your expressions. Note that drifted fields (fields discovered by ADF that are not included in the dataset schema definition) are indicated with a drifted icon:

You’ll see a new MapDrifted transformation has been created for you automatically that serves as your projection for those drifted columns. ADF will automatically create the byName() mappings for you and you can open the Derived Column transformation to modify your model.

This is very useful for designing data flows that require inspection of columns and requires a base set of columns (canonical model) as part of your logic. You can now use these columns with Intellisense in the Expression Builder, Inspect pane, etc.

This is also a very useful feature when working with the Pivot transformation in ADF Mapping Data Flows. The Pivot transformation can create brand new column names based on row values. Since those are dynamic column names, they are not defined as part of the original dataset projection, so they are treated as “drifted” columns by ADF.

You can click on Data Preview in your Pivot transformation, click Map Drifted, and now all of your new column names can be referenced downstream in your data flow. In the Pivot examples below, I’m creating new columns based on each genre row value from my Movies dataset. Notice that each new dynamic Pivot column is treated as “drifted”.

When I click on Map drifted, ADF creates a Derived Column for me so that each column is made to be part of my projection and I can now see the new fields in my Inspect metadata tab:

[…] Mark Kromer walks us through two techniques we can use in Azure Data Factory to deal with schema dri…: […]

Hi Mark,

thanks for sharing all the infos/blogs about ADF & Azure SQL. Great stuff!

I have a question regarding the topics “Schema Drift” and Length of String Data (in particular nvarchar in a SQL DWH sink).

I want to to copy multiple/dynamically-set tables from a schemaless- AzureSQL-DB Source

(allowSchemaDrift: true,validateSchema: false,inferDriftedColumnTypes: true) to

a schemaless Azure SQL DWH Sink(allowSchemaDrift: true,validateSchema: false) with Staging option in Blob Storage=True

Initial setup: All tables exist in source, No tables in sink.

First try: Tables are created in Sink and also have the right datatype + precision for decimals, however all string values are converted/stored as nvarchar(max)…

I want to limit the length to either the original lenght or a fix value like NVARCHAR(255)

Second try:

–> Derived columns with pattern rule: Each column that matches: type==’string’

$$ —-> left(toString($$),255)

–>Strings are truncated at 256 character but length of data field is still nvarachar(max)…

How can i control the length of the string/nvarchar(columns) in the sink?

Thanks for your support,

Best regards,

Luca

You don’t set that in the Sink. Set the max length of strings in a Derived Column. Set the name of your derived column to the same name as the string you need limit. Use the expression left(mystring,maxlength). Or set this for all string columns with a single rule using a column pattern. More here: https://www.youtube.com/watch?v=sPpcSiKQz34