When you design mapping data flows in ADF, you are working against a live Azure Databricks Spark cluster. The size of that cluster is configured through the Azure Integration Runtime (IR). If you do not configure a custom Azure IR, you will use the default Azure IR, which sets a very small cluster size: a single 4-core worker node and a single 4-core driver node. In most cases, that is fine for debugging and data preview. But when you start exploring your data with column statistics or increase the sampling size in debug settings, you may find that you’ve exceeded the capacity of that small default cluster. Below are the steps to increase the size of your debug cluster.

- Create a new Azure IR by going to Connections > Integration Runtimes and clicking +New.

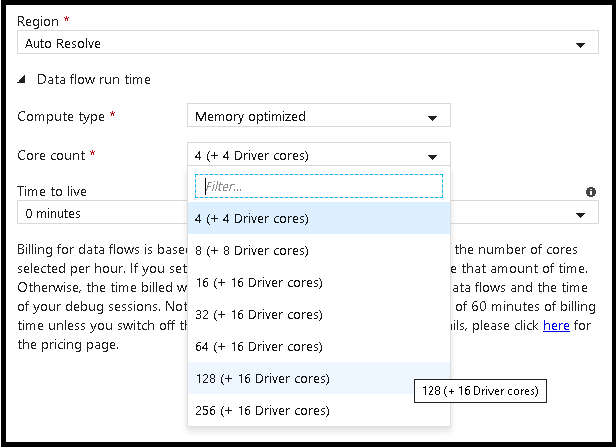

- Choose Azure integration runtime and then expand the Data flow runtime settings.

- Here you can choose a larger cluster size as well as a cluster compute type that better matches the type of data flow you are building (e.g., memory optimized for a memory-intensive flow).

- The Azure IR settings are just configurations that ADF stores and uses when you start up a data flow. No cluster resources are provisioned until you either execute your data flow activity or switch into debug mode.

- Note that the time-to-live (TTL) setting is only honored during data flow pipeline executions. The TTL for debug sessions is hard-coded to 60 minutes.

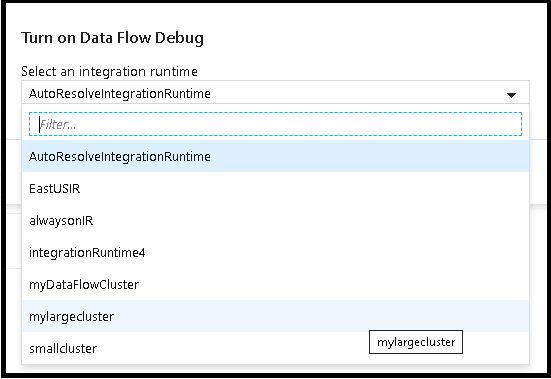

- Now, when you turn on the Data Flow Debug switch, you will be prompted for an Azure IR. This is where you can choose a larger cluster for this debug session:

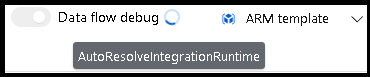

- Once your debug session starts up, you can check which IR is being used by hovering over the Data Flow Debug button.

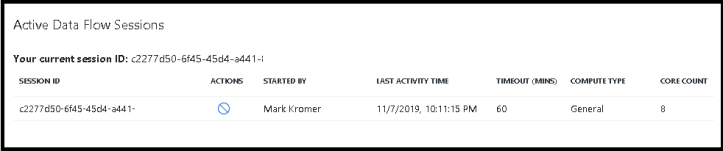

- You can also see which IRs are being used by the active debug sessions in your data factory by clicking the play button icon at the top right, which lists the active sessions and the cluster sizing each one uses.

- This gives you control over the size of the compute environment for your debug session as well as visibility into all debug sessions on your factory. You can also kill or cancel sessions from this screen.
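The Azure IR you configured in the steps above is stored by ADF as a small JSON definition. As a rough sketch of what that configuration might look like, the Python below builds such a definition and applies the TTL rule from the note above. The field names (`computeProperties`, `dataFlowProperties`, `computeType`, `coreCount`, `timeToLive`) and the compute type spellings reflect my understanding of the ADF resource JSON and should be checked against the current documentation. The helper names `build_azure_ir` and `effective_ttl_minutes` and the IR name `DebugLargeIR` are hypothetical, chosen only for illustration.

```python
import json

# Compute type spellings as assumed from the ADF resource JSON; verify before use.
VALID_COMPUTE_TYPES = {"General", "MemoryOptimized", "ComputeOptimized"}

# Per the post: debug sessions ignore the IR's TTL and use a fixed 60 minutes.
DEBUG_SESSION_TTL_MINUTES = 60


def build_azure_ir(name, compute_type="General", core_count=8, ttl_minutes=0):
    """Return a dict shaped like an Azure IR definition with data flow settings.

    core_count is the total across driver and workers (the default IR in the
    post is 4 driver cores + 4 worker cores, so 8 is used as the default here).
    """
    if compute_type not in VALID_COMPUTE_TYPES:
        raise ValueError(f"unknown compute type: {compute_type!r}")
    if core_count <= 0 or ttl_minutes < 0:
        raise ValueError("core_count must be positive and ttl_minutes non-negative")
    return {
        "name": name,
        "properties": {
            "type": "Managed",
            "typeProperties": {
                "computeProperties": {
                    "location": "AutoResolve",
                    "dataFlowProperties": {
                        "computeType": compute_type,
                        "coreCount": core_count,
                        "timeToLive": ttl_minutes,
                    },
                }
            },
        },
    }


def effective_ttl_minutes(ir, debug_session):
    """TTL that actually applies: fixed for debug, the IR setting for pipeline runs."""
    if debug_session:
        return DEBUG_SESSION_TTL_MINUTES
    compute = ir["properties"]["typeProperties"]["computeProperties"]
    return compute["dataFlowProperties"]["timeToLive"]


if __name__ == "__main__":
    # A larger, memory-optimized IR for heavier debug sessions (illustrative values).
    ir = build_azure_ir("DebugLargeIR", "MemoryOptimized", core_count=16, ttl_minutes=10)
    print(json.dumps(ir, indent=2))
```

Nothing here talks to Azure. It only produces the kind of definition you would otherwise create through the UI, which can be handy for keeping IR sizes under source control alongside your pipelines.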


When we choose the AutoResolve IR, what compute type does it use? Memory optimized, general purpose, or compute optimized?

General purpose

Hi, I have been following your posts and have learnt a lot. I am trying to do an incremental update from blob storage files to Azure SQL DB. I couldn't find anything on the internet about this. The only way I see is to add a staging table in the SQL DB before doing the incremental update.

If there is a much easier way to do it, maybe using data flows, I would request you to show us how.

Incremental pattern in ADF: https://docs.microsoft.com/en-us/azure/data-factory/tutorial-incremental-copy-overview

Detect changed data in ADF: https://www.youtube.com/watch?v=CaxIlI7oXfI